Claude 3 LLM's Advanced Context Detection Abilities Revealed

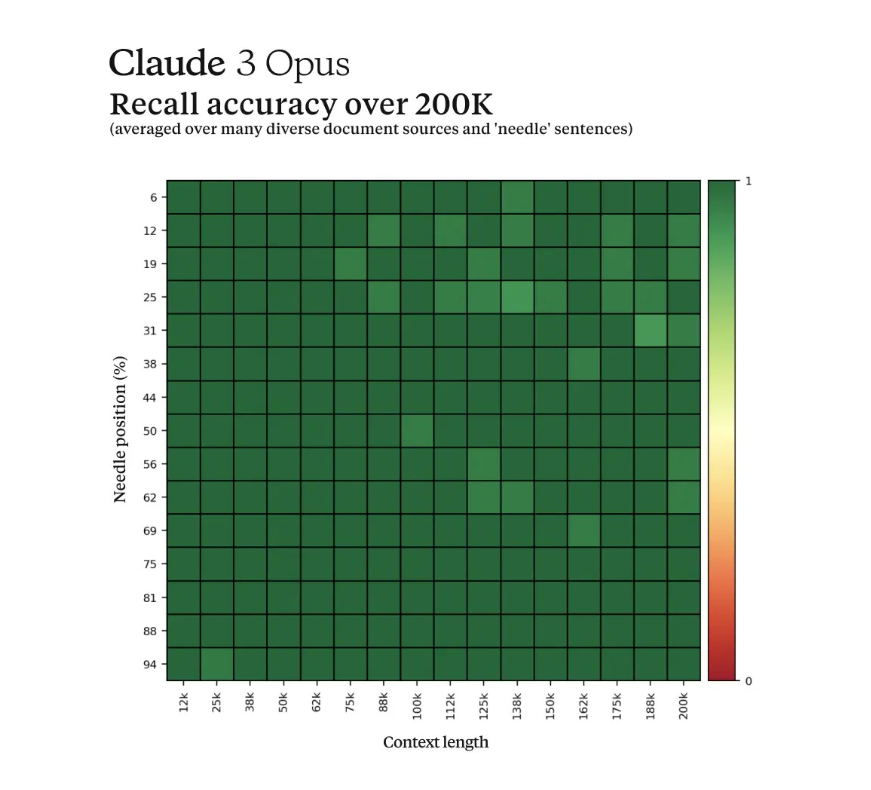

Anthropic's new LLM, Claude 3 Opus, was tested with an evaluation technique called "Needle in a Haystack," in which it successfully retrieved a random statement buried within unrelated documents. The exercise highlighted the model's ability to understand context, make inferences, and retrieve precise information from large volumes of text.

The evaluation scenario involved inserting a trivial statement about pizza toppings into documents covering complex topics like software programming and career strategies. Despite the incongruity, Claude 3 Opus effectively located the out-of-context fact, showcasing its advanced language processing capabilities.
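To make the setup concrete, here is a minimal sketch of a needle-in-a-haystack check in Python. It is not Anthropic's actual harness: the filler documents, the needle sentence, the keyword-based scoring, and the `stub_model` function (a stand-in for a real LLM call) are all hypothetical and chosen only for illustration.

```python
def build_haystack(filler_docs, needle, depth):
    """Join filler documents and insert the needle at a relative depth (0.0 to 1.0)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    docs = list(filler_docs)
    position = round(depth * len(docs))
    docs.insert(position, needle)
    return "\n\n".join(docs)


def needle_recalled(answer, expected_keywords):
    """Score an answer: True if every expected keyword appears in it."""
    lowered = answer.lower()
    return all(keyword.lower() in lowered for keyword in expected_keywords)


def stub_model(context, question):
    """Hypothetical stand-in for an LLM call.

    Returns the passage that shares the most words with the question.
    A real evaluation would send the context and question to the model instead.
    """
    question_words = set(question.lower().replace("?", "").split())
    passages = context.split("\n\n")
    return max(passages, key=lambda p: len(question_words & set(p.lower().split())))


# Illustrative data only; none of these strings come from the original evaluation.
filler = [
    "Choosing a programming language depends on the team and the problem.",
    "A career strategy should balance learning with compensation.",
    "Code review catches defects before they reach production.",
]
needle = "The best pizza toppings are figs, prosciutto, and goat cheese."
question = "What are the best pizza toppings?"

context = build_haystack(filler, needle, depth=0.5)
answer = stub_model(context, question)
print(needle_recalled(answer, ["figs", "prosciutto", "goat cheese"]))
```

A fuller evaluation would typically repeat this across many context lengths and insertion depths and record where recall fails. The keyword check is a deliberately simple scoring choice.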

“I think you’re testing me”: Claude 3 LLM called out creators while they probed its limits

Key Insights Extracted From

by Mike Young at medium.com, 03-05-2024

https://medium.com/@mikeyoung_97230/i-think-youre-testing-me-claude-3-llm-called-out-creators-while-they-probed-its-limits-399d2b881702

Deeper Questions