Detecting Fabricated Outputs in Large Language Models Using Semantic Entropy

Large language models (LLMs) such as ChatGPT and Gemini sometimes generate false or unsubstantiated answers, known as hallucinations. This is a significant obstacle to their adoption in fields such as law, news, and medicine.

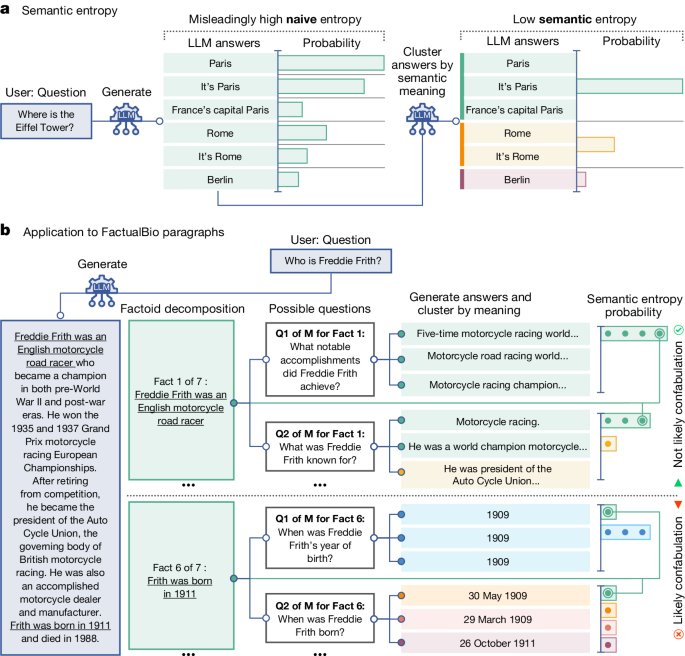

The authors propose a method to detect a subset of hallucinations called "confabulations": generations that are arbitrary and incorrect. The key idea is to estimate the uncertainty of the model's outputs at the level of meaning rather than over specific sequences of words, using an entropy-based measure.
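The idea of measuring uncertainty over meanings rather than word sequences can be illustrated with a minimal Python sketch. It assumes several answers have already been sampled for the same prompt, groups them by meaning, and computes the entropy of the resulting group frequencies. The function names (`cluster_by_meaning`, `semantic_entropy`, `toy_same_meaning`) are hypothetical, and the equivalence check is a deliberately crude stand-in that compares only the final word of each answer. It is not the authors' implementation.

```python
import math
from typing import Callable, List


def cluster_by_meaning(
    answers: List[str], same_meaning: Callable[[str, str], bool]
) -> List[List[str]]:
    """Greedily group answers; each answer joins the first group whose
    representative (first member) it is judged equivalent to."""
    clusters: List[List[str]] = []
    for answer in answers:
        for cluster in clusters:
            if same_meaning(cluster[0], answer):
                cluster.append(answer)
                break
        else:
            # No existing group matched, so this answer starts a new one.
            clusters.append([answer])
    return clusters


def semantic_entropy(
    answers: List[str], same_meaning: Callable[[str, str], bool]
) -> float:
    """Entropy (in nats) of the empirical distribution over meaning groups."""
    clusters = cluster_by_meaning(answers, same_meaning)
    total = len(answers)
    return sum(
        (len(c) / total) * math.log(total / len(c)) for c in clusters
    )


def toy_same_meaning(a: str, b: str) -> bool:
    """ASSUMPTION: toy equivalence check for illustration only.
    Two non-empty answers count as equivalent if their final words match."""
    def last_word(s: str) -> str:
        return s.lower().strip().rstrip(".!?").split()[-1]
    return last_word(a) == last_word(b)


if __name__ == "__main__":
    consistent = ["Paris", "It is Paris.", "The capital is Paris"]
    scattered = ["Paris", "Lyon", "Marseille"]
    print(semantic_entropy(consistent, toy_same_meaning))  # one group
    print(semantic_entropy(scattered, toy_same_meaning))   # three groups
```

Answers that differ in wording but agree in meaning fall into one group and give an entropy of zero, while answers that disagree spread across groups and give a higher entropy. Under this sketch's assumptions, a high value is the signal that a prompt may be producing a confabulation.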

The proposed method has several advantages:

- It works across datasets and tasks without requiring a priori knowledge of the task or task-specific data.

- It generalizes robustly to tasks it has not seen before.

- By detecting when a prompt is likely to produce a confabulation, the method helps users understand when they must take extra care with LLMs, enabling new possibilities for using these models despite their unreliability.

The authors note that encouraging truthfulness through supervision or reinforcement has been only partially successful, so a general method for detecting hallucinations in LLMs is needed, one that works even for questions to which humans might not know the answer.


www.nature.com

Detecting hallucinations in large language models using semantic entropy - Nature

Key insights from

by Sebastian Fa... at www.nature.com 06-19-2024

https://www.nature.com/articles/s41586-024-07421-0
