Enhancing Language Models with Retrieval-Augmented Generation (RAG) 2.0

Key Concept

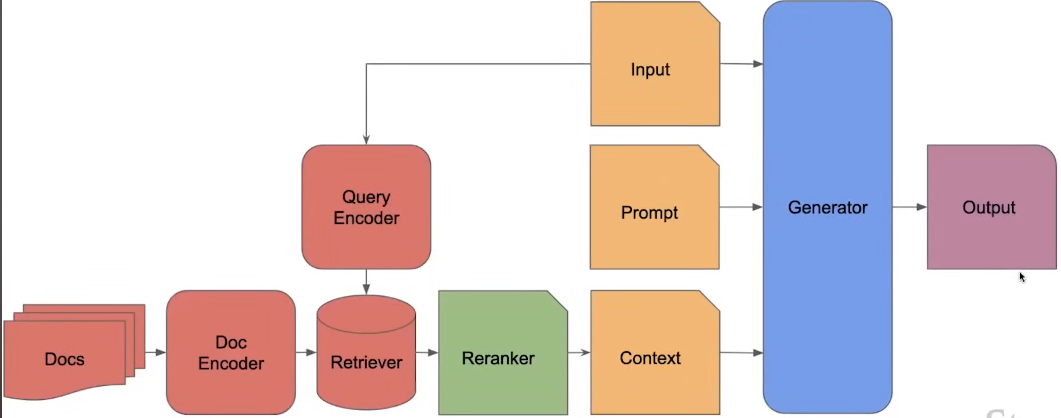

Retrieval-Augmented Generation (RAG) is a technique that enhances language models by retrieving relevant external information and supplying it as additional context, enabling them to generate more specific and informative responses.
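As a minimal sketch of the basic retrieve-then-generate loop, the Python below ranks a tiny in-memory corpus against a query and prepends the best matches to the prompt. It assumes bag-of-words cosine similarity as a stand-in retriever (production systems more often use BM25 or dense embeddings), and the corpus and the `retrieve` and `build_prompt` helpers are hypothetical. The generator call itself is omitted; the sketch stops at the augmented prompt a language model would receive.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """The 'retriever': return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(doc))), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Prepend retrieved passages as context for the generator (hypothetical format)."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical toy corpus and query.
corpus = [
    "RAG 2.0 trains the retriever and generator together.",
    "Paris is the capital of France.",
    "A retriever selects documents relevant to a query.",
]
query = "What does a retriever do?"
print(build_prompt(query, retrieve(query, corpus, k=1)))
```

Because the retriever here is a fixed similarity function, nothing about it adapts to what the generator needs; that separation is the limitation RAG 2.0 targets.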

Abstract

The article discusses the concept of Retrieval-Augmented Generation (RAG) and introduces RAG 2.0, which aims to address the shortcomings of current RAG pipelines.

The key points are:

- Language models have made significant progress, but they still have important limitations, such as the inability to answer specific queries due to a lack of context.

- RAG is a technique that provides additional context to language models, allowing them to generate more accurate and informative responses.

- The problem with current RAG pipelines is that the different submodules (retriever and generator) are not fully integrated and optimized to work together, resulting in suboptimal performance.

- RAG 2.0 aims to address these issues by creating models with trainable retrievers, where the entire RAG pipeline can be customized and fine-tuned like a language model.

- The article discusses the potential benefits of RAG 2.0, including better retrieval strategies, the use of state-of-the-art retrieval algorithms, and the ability to contextualize the retriever for the generator.

- The author emphasizes the importance of combining the contextualized retriever and generator to achieve state-of-the-art performance in retrieval-augmented language models.
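The idea of a trainable retriever can be sketched with a latent-variable objective like the one in the original RAG paper (Lewis et al., 2020): treat the retrieved document z as hidden and maximize the marginal likelihood p(y | x) = Σ_z p(z | x) · p(y | x, z). The summary does not describe how RAG 2.0 trains its retriever, so this illustrates the general technique, not the article's method. The toy scores, generator probabilities, and helper names below are hypothetical, and the generator is held fixed so that only the retriever learns.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: turns retriever scores into p(z | x)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def marginal_log_likelihood(scores: list[float], gen_probs: list[float]) -> float:
    """log p(y | x) = log sum_z p(z | x) * p(y | x, z)."""
    prior = softmax(scores)
    return math.log(sum(p * g for p, g in zip(prior, gen_probs)))


def retriever_gradient(scores: list[float], gen_probs: list[float]) -> list[float]:
    """Gradient of the marginal log-likelihood w.r.t. each retriever score.

    It equals posterior minus prior, p(z | x, y) - p(z | x), so documents that
    help the generator produce y get their scores pushed up.
    """
    prior = softmax(scores)
    joint = [p * g for p, g in zip(prior, gen_probs)]
    total = sum(joint)
    posterior = [j / total for j in joint]
    return [q - p for q, p in zip(posterior, prior)]


# Hypothetical toy setup: three candidate documents for one query. The retriever
# initially prefers document 0, but the fixed generator is only likely to
# produce the correct answer when given document 2.
scores = [2.0, 1.0, 0.0]        # retriever scores s_z (trainable)
gen_probs = [0.05, 0.10, 0.90]  # p(y | x, z) from a frozen generator
learning_rate = 1.0

before = marginal_log_likelihood(scores, gen_probs)
for _ in range(50):
    grad = retriever_gradient(scores, gen_probs)
    scores = [s + learning_rate * g for s, g in zip(scores, grad)]  # gradient ascent
after = marginal_log_likelihood(scores, gen_probs)

print(f"log p(y|x): {before:.3f} -> {after:.3f}")
print("retriever now prefers document", scores.index(max(scores)))
```

Each document's gradient works out to its posterior probability minus its prior, so documents that make the correct answer more likely are promoted. In a fully end-to-end pipeline, the same loss would also update the generator's parameters.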

Source

medium.com

RAG 2.0: Retrieval Augmented Language Models

Key Insights From

by Vishal Rajpu... at medium.com, 04-16-2024

https://medium.com/aiguys/rag-2-0-retrieval-augmented-language-models-3762f3047256

Further Questions

What are the specific challenges and limitations of current RAG pipelines that RAG 2.0 aims to address?

Current RAG pipelines are built from submodules that are not designed or optimized to work together, which results in suboptimal performance. Components such as the retriever and the generator are stitched together rather than trained as one system, so they do not work seamlessly together, much like Frankenstein's monster. RAG 2.0 aims to address this by introducing trainable retrievers, so that the entire RAG pipeline can be customized and fine-tuned. By tightening the integration between the retriever and the generator, RAG 2.0 seeks to overcome the shortcomings of current RAG models and improve overall performance.

How can the integration and optimization of the retriever and generator components in RAG 2.0 lead to improved performance compared to existing approaches?

Integrating and jointly optimizing the retriever and generator can improve performance because each component is tuned with the other in mind rather than in isolation. When the retriever is customized and the entire RAG pipeline is fine-tuned, the retriever can learn to surface the information the generator actually needs, and the retrieved information is better contextualized for the generator. This tighter coordination is expected to yield more accurate, specific, and relevant outputs than existing approaches.

What are the potential applications and use cases of retrieval-augmented language models, and how might RAG 2.0 expand the capabilities of these models?

Retrieval-augmented language models have applications in question answering, information retrieval, and content generation. They can power chatbots, search engines, and content recommendation systems that need accurate, contextually relevant responses. RAG 2.0 extends these capabilities with trainable retrievers and customizable pipelines, which allow retrieval to be tailored to a specific task or domain and can improve performance on tasks that require contextual understanding. This could broaden the range of domains in which retrieval-augmented models deliver accurate responses.