Scaling Neural Machine Translation to 200 Languages: Overcoming Challenges and Achieving Significant Improvements in Translation Quality

Core Concepts

A single massively multilingual neural machine translation model that leverages transfer learning across languages to achieve significant improvements in translation quality across 200 languages, including low-resource languages.

Abstract

This summary covers the development of a neural machine translation (NMT) system that scales to 200 languages, including low-resource languages. The key points are:

The development of neural techniques has opened up new avenues for research in machine translation, enabling highly multilingual capacities and even zero-shot translation.

However, scaling high-quality NMT requires large volumes of parallel bilingual data, which are not equally available for the world's 7,000+ languages. As a result, work has focused on improving translation for a small group of high-resource languages, which exacerbates digital inequities.

To address this, the authors introduce "No Language Left Behind" - a single massively multilingual model that leverages transfer learning across languages.

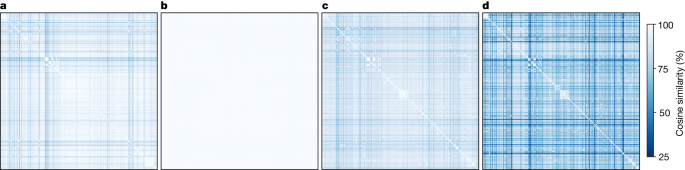

The model is based on the Sparsely Gated Mixture of Experts architecture and is trained on data obtained using new mining techniques tailored for low-resource languages.

The authors also devised multiple architectural and training improvements to counteract overfitting while training on thousands of tasks.

The model was evaluated using an automatic benchmark (FLORES-200), a human evaluation metric (XSTS), and a toxicity detector, and achieved an average of 44% improvement in translation quality as measured by BLEU compared to previous state-of-the-art models.

By demonstrating how to scale NMT to 200 languages and making all contributions freely available for non-commercial use, the work lays important groundwork for the development of a universal translation system.
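The Sparsely Gated Mixture of Experts idea named in the key points can be illustrated with a small routing sketch. This is a minimal, framework-free illustration of top-k gating in general, not the authors' implementation: the function names, the toy experts, and the gate weights are all hypothetical, and the noise term and load-balancing losses used in practical systems are left out.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(logits, k=2):
    """Return {expert_index: weight} for the k highest-scoring experts.

    Weights are a softmax over the selected logits only, so they sum to 1
    and every other expert receives weight 0 (it is never evaluated).
    """
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in top])
    return dict(zip(top, weights))


def moe_layer(x, gate_w, experts, k=2):
    """Route one token vector x through k experts and mix their outputs."""
    # One gating logit per expert: dot product of x with that expert's gate row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_w]
    gates = top_k_gate(logits, k)
    out = [0.0] * len(x)
    for idx, g in gates.items():
        y = experts[idx](x)  # only the selected experts are called
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, gates


# Toy setup (hypothetical): four "experts" that just scale their input.
experts = [lambda x, s=s: [s * xi for xi in x] for s in (1.0, 2.0, 3.0, 4.0)]
gate_w = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [-1.0, 0.0]]

out, gates = moe_layer([1.0, 0.0], gate_w, experts, k=2)
print(sorted(gates))  # indices of the two experts that were used
```

The point of the design is that only `k` experts run per token, so the total parameter count can grow with the number of experts while per-token compute stays roughly constant.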

Scaling neural machine translation to 200 languages - Nature

Stats

The model was evaluated on 40,000 translation directions.

The model achieved an average of 44% improvement in translation quality as measured by BLEU compared to previous state-of-the-art models.
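The two figures above can be put in context with some simple arithmetic. The sketch below assumes that a "translation direction" is an ordered (source, target) language pair and that the 44% figure is a relative improvement over a baseline BLEU score; the example scores are invented for illustration and do not come from the paper, and the summary does not say how the average across directions was taken.

```python
def translation_directions(num_languages):
    """Ordered (source, target) pairs among n languages, excluding self-pairs."""
    return num_languages * (num_languages - 1)


def relative_improvement(baseline, new):
    """Relative gain of `new` over `baseline`, e.g. 0.44 for a 44% gain."""
    return (new - baseline) / baseline


# 200 languages give 200 * 199 ordered pairs, close to the ~40,000 reported.
print(translation_directions(200))  # 39800

# Hypothetical scores: a baseline BLEU of 20.0 rising to 28.8 is a 44% gain.
print(f"{relative_improvement(20.0, 28.8):.0%}")  # 44%
```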

Quotes

"To break this pattern, here we introduce No Language Left Behind—a single massively multilingual model that leverages transfer learning across languages."

"By demonstrating how to scale NMT to 200 languages and making all contributions in this effort freely available for non-commercial use, our work lays important groundwork for the development of a universal translation system."

Key Insights Distilled From

by Mart... at www.nature.com 06-05-2024

https://www.nature.com/articles/s41586-024-07335-x

Deeper Inquiries

What are the specific architectural and training improvements that the authors devised to counteract overfitting while training on thousands of tasks?

The summarized text names two concrete ingredients. First, the model is based on the Sparsely Gated Mixture of Experts architecture, in which each input is routed to a small subset of expert sub-networks rather than through every parameter. Second, the training data was obtained with new mining techniques tailored for low-resource languages. Beyond that, the text states only that the authors devised multiple architectural and training improvements to counteract overfitting while training on thousands of tasks; it does not list them. Standard regularization techniques such as dropout, weight decay, and early stopping are plausible candidates, since they discourage a model from memorizing its training data and improve generalization to unseen data, but the summary does not confirm which ones were used.
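Early stopping, one of the generic regularization techniques mentioned in this answer, can be sketched in a few lines. This is an illustration of the general technique only, not the authors' training procedure; the function name, the `patience` and `min_delta` parameters, and the loss values are hypothetical.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve on the best value
    by more than `min_delta` for `patience` consecutive epochs. If that
    never happens, the last epoch is returned as the stop epoch.
    """
    best = float("inf")
    best_epoch = -1
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            # New best: remember it and reset the patience counter.
            best, best_epoch, bad_epochs = loss, epoch, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Invented validation losses: the minimum is at epoch 2, and two epochs
# without improvement follow, so training stops at epoch 4.
losses = [1.0, 0.8, 0.7, 0.75, 0.72, 0.9]
print(early_stopping_epoch(losses, patience=2))  # (4, 2)
```

In practice the checkpoint saved at `best_epoch` would be the one kept, since later epochs fit the training data more closely without improving held-out loss.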

How can the techniques used in this work be extended to improve translation quality for even lower-resource languages beyond the 200 covered in this study?

The techniques in this study could be extended to languages with even fewer resources than the 200 covered. One approach is to further refine the data mining techniques tailored for low-resource languages so that they yield more parallel bilingual data. Another is transfer learning: the model trained on the 200 languages could be fine-tuned on whatever limited data exists for a new language, which may improve translation quality for that language. Collaboration with linguistic experts and local communities could also help in collecting and annotating data. Progress on all three fronts (data collection, transfer learning, and model adaptation) would be needed to reach languages with extremely limited resources.

What are the potential societal and economic implications of developing a truly universal translation system that can serve the needs of the world's 7,000+ languages?

The development of a universal translation system capable of serving all 7,000+ languages could have profound societal and economic implications. From a societal perspective, such a system could facilitate greater cross-cultural communication and understanding, breaking down language barriers and fostering global collaboration. It could empower marginalized communities and indigenous language speakers by providing them with a platform to share their knowledge and culture with the world. Additionally, improved access to information and resources through accurate translation could contribute to educational advancement and cultural preservation across diverse linguistic groups.

On an economic level, a universal translation system could open up new markets and opportunities for businesses to engage with a global audience. It could streamline international trade, negotiations, and collaborations by enabling seamless communication across languages. Furthermore, the increased efficiency in translation services could lead to cost savings and improved productivity for organizations operating in multilingual environments. Overall, the development of a universal translation system has the potential to drive social inclusion, economic growth, and innovation on a global scale, benefiting individuals, communities, and economies worldwide.
