Unlocking the Secrets of Human Decision-Making: Insights from "Thinking, Fast and Slow"

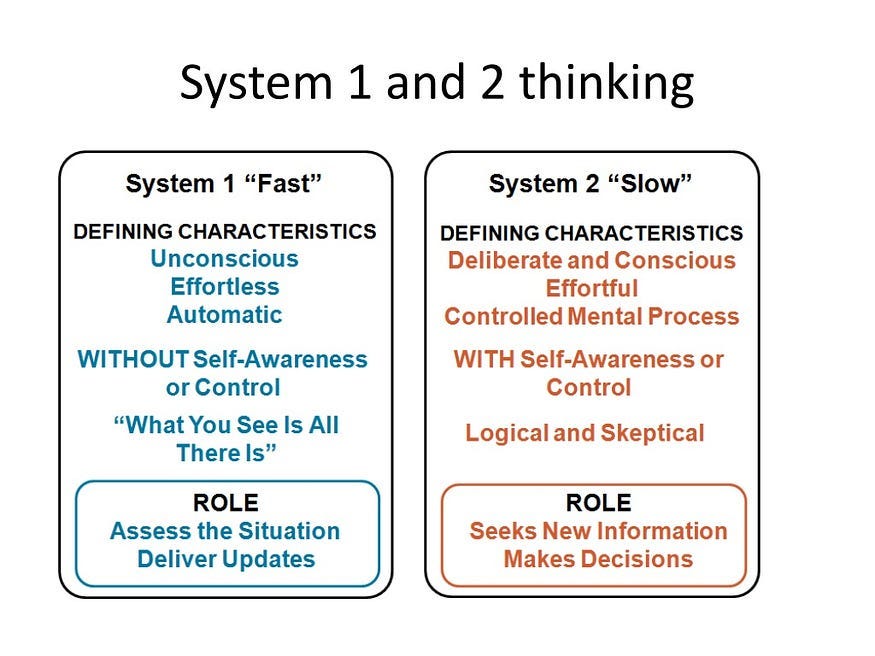

The article gives an overview of the key insights from "Thinking, Fast and Slow" by Nobel laureate Daniel Kahneman. It explains the book's two systems of thinking, System 1 (fast, automatic, and emotional) and System 2 (slow, effortful, and logical), and how they interact to shape our decisions.

The author highlights several cognitive biases that arise from this dual-system architecture: confirmation bias, framing effects, the availability heuristic, anchoring bias, and the representativeness heuristic. These biases can lead to systematic errors in judgment, even when we believe we are being rational.

The article stresses the importance of understanding these biases and their implications, particularly in a world of rapid information and near-instant decisions. It encourages readers to be more mindful of their automatic responses and to deliberately engage System 2 to make better choices.

The author also shares personal experience of how reading "Thinking, Fast and Slow" made them more aware of cognitive biases and of how those biases are exploited in contexts such as marketing, AI communications, and risk assessment.


Key Insights Distilled From

What I learned from Thinking Fast and Slow
machine-learning-made-simple.medium.com, 04-01-2024

https://machine-learning-made-simple.medium.com/what-i-learned-from-thinking-fast-and-slow-2adb4b952859
