Facebook's Role in Radicalizing Users Revealed

Core Concepts

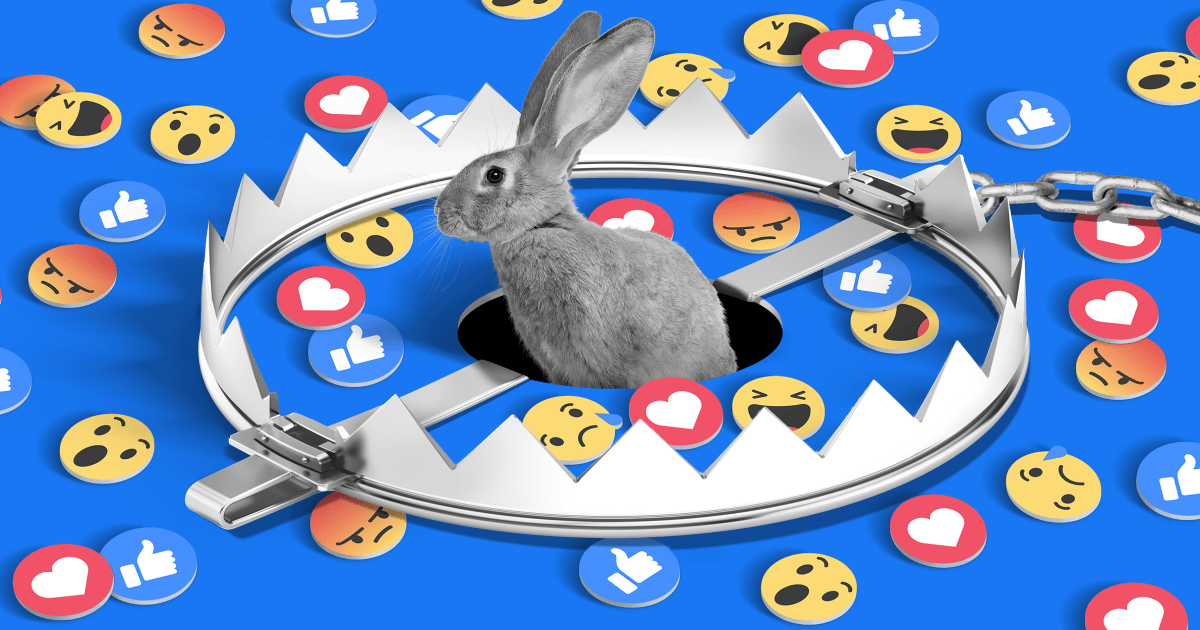

The author argues that Facebook's algorithms and recommendation systems have pushed users toward extremist groups, contributing to radicalization and the spread of conspiracy theories. The main thesis is that Facebook's permissiveness toward harmful content poses a significant threat to public safety and democracy.

Abstract

The article examines an internal study in which Facebook researchers created a test account for a fictitious user named Carol Smith to analyze how the platform radicalizes users. Although Carol had shown no interest in conspiracy theories, the platform recommended QAnon groups to her within days, highlighting the influence of its recommendation algorithm. The research found that Facebook's recommendation systems led some users down "rabbit holes" of violent conspiracy theories, raising concerns about public safety and democracy. The findings also show how anti-vaccine groups and disinformation agents exploited Facebook's permissiveness, further threatening societal well-being.

'Carol's Journey': What Facebook knew about how it radicalized users

Stats

Within one week, Smith’s feed was full of groups and pages that had violated Facebook’s own rules.

By summer 2020, Facebook was hosting thousands of private QAnon groups and pages.

Drebbel, an internal Facebook research project, identified 5,931 QAnon groups with 2.2 million total members.

Among 913 anti-vaccination groups with 1.7 million members, the study found that 1 million of those members had joined via gateway groups.

Quotes

"We should be concerned about people affected by both problems."

"Facebook literally helped facilitate a cult."

"There is great hesitancy to proactively solve problems."

Key Insights Distilled From

www.nbcnews.com, 03-04-2024

https://www.nbcnews.com/tech/tech-news/facebook-knew-radicalized-users-rcna3581

Deeper Inquiries

What measures can social media platforms like Facebook take to prevent the radicalization of users?

Social media platforms like Facebook can take several measures to prevent the radicalization of users. First, they can adjust their ranking and recommendation algorithms to detect and limit the spread of extremist content, conspiracy theories, and hate speech. By prioritizing credible sources and fact-checking information before it spreads widely on the platform, they can reduce the likelihood that users are exposed to harmful ideologies.

Second, platforms can invest in more robust moderation systems that quickly remove or flag content that violates community standards. This includes monitoring groups and pages known for promoting extremism and taking decisive action against them.

Third, social media companies should prioritize transparency, both by giving users clear information about how their data is used for recommendations and by ensuring that their algorithms do not inadvertently amplify divisive or radicalizing content.

Finally, collaborating with experts in psychology, sociology, and extremism studies could help these platforms better understand how online radicalization works and design targeted interventions to counter it.

How can society balance freedom of expression with the need to curb harmful content online?

Balancing freedom of expression with curbing harmful content online requires an approach that weighs both individual rights and societal well-being. One way to achieve this balance is through clear guidelines on acceptable behavior in digital spaces. Platforms should enforce policies that prohibit harassment, incitement to violence, hate speech, and misinformation while still allowing diverse viewpoints.

Education plays a crucial role in enabling individuals to critically evaluate the information they encounter online. Promoting digital literacy helps users distinguish credible sources from misinformation and fosters healthier discourse in online communities.

Regulatory frameworks can also hold social media companies accountable for moderating harmful content effectively. Governments may need to pass laws that define platforms' responsibilities for user safety without unnecessarily infringing on free speech.

In what ways can individuals protect themselves from falling into extremist online communities?

Individuals can protect themselves from being drawn into extremist online communities by thinking critically about the digital content they engage with. Before accepting a claim as true, they should verify it against multiple reliable sources rather than relying solely on social media feeds or echo chambers.

Maintaining a diverse network of connections across different perspectives helps individuals avoid getting trapped in ideological bubbles where extreme views are normalized. Actively seeking out reputable news sources outside social media also broadens one's understanding of complex issues beyond the sensationalized narratives common in extremist circles.

Setting boundaries around screen time and consciously limiting exposure to inflammatory or polarizing content can reduce susceptibility to the radicalization tactics used by malicious actors online.
