Proliferation of AI-Generated Content Degrades Performance of Generative AI Models

The article discusses how the growing volume of AI-generated content on the Internet could degrade the performance of generative AI models themselves. As AI systems such as OpenAI's ChatGPT and Meta's Llama produce more and more content, the amount of AI-generated material online is rising rapidly.

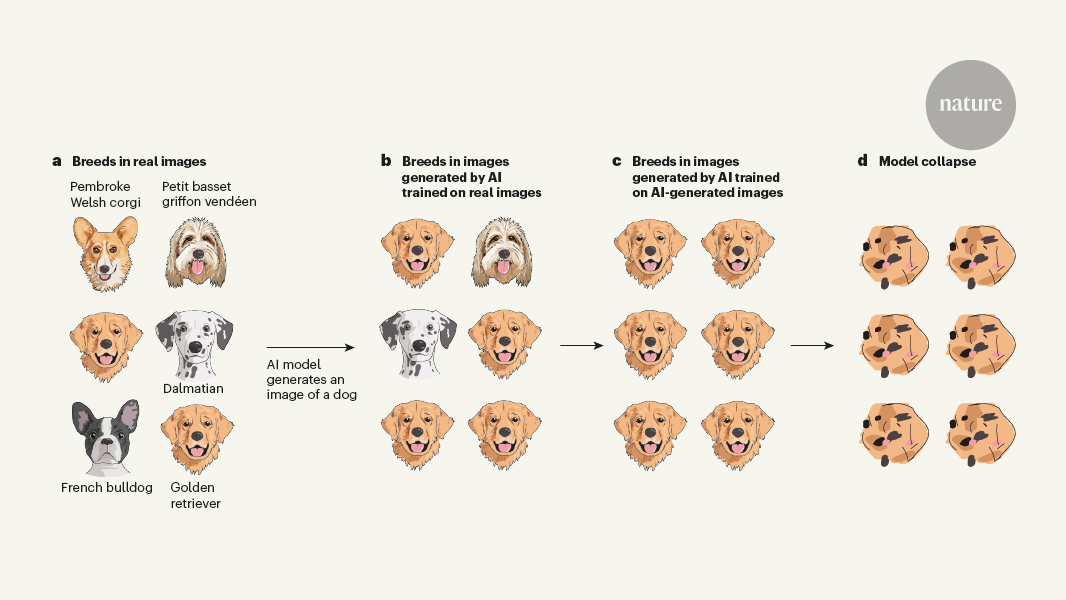

The article cites a study by Shumailov et al., published in Nature, which found that when generative AI models are trained on too much AI-generated content, including the output of earlier generations of models, they begin producing "gibberish": low-quality, incoherent output. The authors call this "model collapse". Because each generation learns from a finite sample of its predecessor's output, errors compound: the models increasingly reproduce the patterns and biases of AI-generated data while rarer patterns from the original data are lost, degrading their ability to generate meaningful, coherent content.
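Why repeated training on model output loses information can be illustrated with a toy simulation. This is a minimal sketch, not code from Shumailov et al.: it assumes each "model" is just a one-dimensional Gaussian refitted to a small sample drawn from the previous generation's model, and the function `simulate_collapse` and its parameters are hypothetical names chosen for illustration.

```python
import random
import statistics


def simulate_collapse(generations: int = 200, samples_per_gen: int = 20,
                      seed: int = 0) -> list[float]:
    """Toy model of recursive training (illustrative assumption, not the study's setup).

    Each generation's "model" is a Gaussian fitted by maximum likelihood to
    samples drawn from the previous generation's Gaussian. Returns the fitted
    standard deviation at every generation, starting with the original data.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original, human-made data distribution
    history = [sigma]
    for _ in range(generations):
        # The next model sees only data produced by the current model.
        data = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        mu = statistics.fmean(data)
        # Population (maximum-likelihood) std dev: slightly biased low, so the
        # spread tends to shrink a little with every generation.
        sigma = statistics.pstdev(data, mu)
        history.append(sigma)
    return history


if __name__ == "__main__":
    history = simulate_collapse()
    for gen in range(0, len(history), 50):
        print(f"generation {gen:3d}: sigma = {history[gen]:.3g}")
```

Running it shows the fitted spread shrinking across generations: values far from the mean, the "tails", stop being sampled, so later models can no longer represent them. The small `samples_per_gen` exaggerates the effect to make it visible quickly; larger samples slow the drift but, in this toy setup, do not remove it.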

The article notes that the effects of an AI-generated Internet on humans remain to be seen, but that the spread of AI-generated content could harm the performance of generative AI models themselves. This underscores the importance of carefully curating training data so that these models keep producing high-quality output and do not come to depend on the biases of AI-generated content.

From the original content:

www.nature.com

AI produces gibberish when trained on too much AI-generated data

by Emily Wenger, www.nature.com, 07-24-2024

https://www.nature.com/articles/d41586-024-02355-z

深掘り質問