Anthropic's Claude 2: A Safer AI Bot

Key Concepts

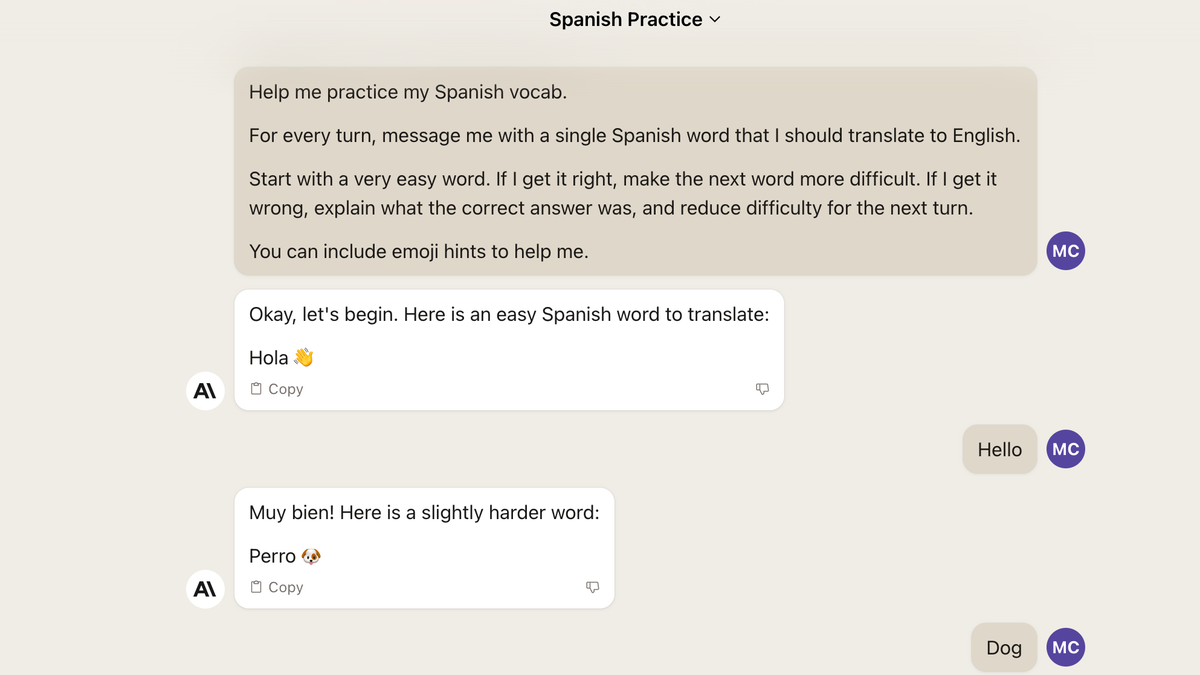

Anthropic's Claude 2 is positioned as a safer AI bot, emphasizing accuracy and ethical responses in contrast to previous models.

Summary

Anthropic's Claude 2, developed by ex-OpenAI engineers, aims to be a helpful and honest AI bot. Positioned as a safer alternative in the rapidly developing AI space, Claude 2 offers improved accuracy in coding, math, and reasoning. The model has been trained to avoid harmful responses and follows strict rules to ensure ethical behavior. Unlike other bots with controversial outputs, Claude 2 prioritizes safety and reliability while maintaining a human touch in its interactions.

Ex-OpenAI engineers have built a "safer" AI

Statistics

Claude 2 scored 76% on the multiple-choice section of the 2021 multistate practice bar exam.

About 10% of Claude 2's training data was non-English.

Quotes

"Perhaps we could have an interesting discussion about something else!" - Claude

Key insights from

qz.com, 07-12-2023

https://qz.com/ex-openai-engineers-have-built-a-safer-ai-1850628574

Deeper Questions

How does the development of "safer" AI bots like Claude impact the future of artificial intelligence?

The development of "safer" AI bots like Claude has a significant impact on the future of artificial intelligence. By focusing on creating AI models that prioritize safety, honesty, and harmlessness, companies like Anthropic are setting a new standard for ethical AI development. This shift towards safer AI not only improves user trust in these technologies but also helps mitigate potential risks associated with biased or harmful outputs from AI systems. As more organizations adopt similar principles in their AI development processes, we can expect to see a more responsible and reliable use of artificial intelligence across various industries.

What are potential drawbacks or limitations of relying on AI models like Claude for various tasks?

While AI models like Claude offer numerous benefits, relying on them has limitations. One is bias in the training data used to develop these models, which can lead to biased or discriminatory outputs. Additionally, as Microsoft's Tay bot showed, even well-intentioned AI systems can produce harmful content if they are not carefully monitored and controlled. Another drawback is that these bots lack true understanding or consciousness, which limits their ability to handle complex human interactions or nuanced situations.

How can the integration of ethical principles into AI models be improved beyond what Anthropic has implemented?

To improve the integration of ethical principles into AI models beyond what Anthropic has implemented, several strategies can be considered. Firstly, increasing transparency around how these models are developed and trained can help identify and address biases early in the process. Implementing diverse datasets that represent different perspectives and demographics can also reduce bias in model outputs. Furthermore, involving interdisciplinary teams consisting of ethicists, social scientists, and domain experts during all stages of model development can provide valuable insights into potential ethical implications.

By incorporating ongoing monitoring mechanisms that continuously evaluate model performance against established ethical guidelines post-deployment, organizations can ensure that their AI systems remain aligned with ethical standards over time.
