Reference Architecture for Emerging LLM Applications

Core Concepts

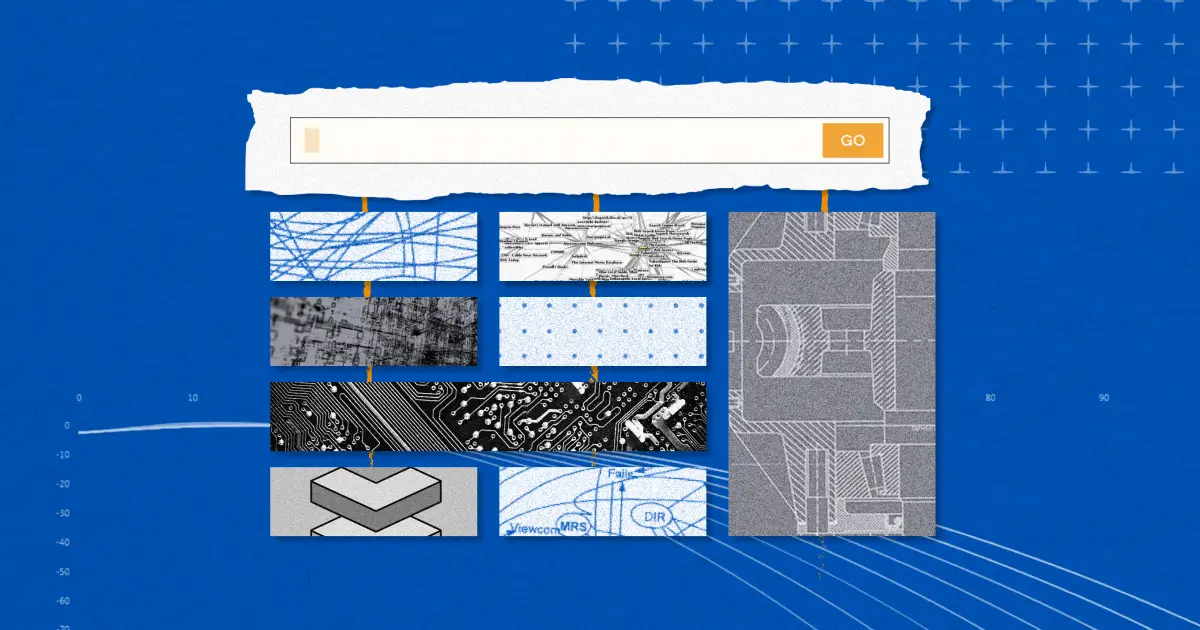

Large language models are a powerful tool for software development, with in-context learning being a key design pattern. The author argues that using pre-trained LLMs with clever prompting and contextual data control is more efficient than fine-tuning models.

Summary

The article presents a reference architecture for emerging Large Language Model (LLM) applications, focusing on in-context learning as the key strategy. It outlines the in-context learning workflow, in which a pre-trained LLM is used as-is and its behavior is controlled through clever prompts and contextual data. The post highlights the importance of efficient prompt construction and retrieval, along with the role of vector databases in storing and retrieving embeddings. It also surveys the tools and frameworks developers use to build LLM applications, such as orchestration frameworks like LangChain and operational tools for model validation. Finally, it touches on future trends such as agent frameworks and the evolving landscape of open-source LLMs.
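The retrieval step of this workflow can be illustrated with a minimal sketch. It is not the article's implementation: `embed` is a toy bag-of-words stand-in for a real embedding model, the in-memory list stands in for a vector database, and all function names and sample documents are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Place only the retrieved documents in the prompt, not the whole corpus."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical sample corpus.
DOCS = [
    "Refunds are processed within five business days.",
    "Our office is closed on public holidays.",
    "Refunds require the original receipt.",
]

if __name__ == "__main__":
    print(build_prompt("how are refunds processed", DOCS))
```

In a real application the embedding call and the similarity search would be delegated to an embedding model and a vector database; the shape of the flow (embed, rank, keep the top few, build the prompt) is what the sketch is meant to show.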

Emerging Architectures for LLM Applications | Andreessen Horowitz

Statistics

The biggest GPT-4 model can only process ~50 pages of input text.

A single GPT-4 query over 10,000 pages would cost hundreds of dollars at current API rates.

AutoGPT was described as "an experimental open-source attempt to make GPT-4 fully autonomous."

AutoGPT was the fastest-growing GitHub repo in history this spring.
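The page-count and cost figures above can be sanity-checked with rough arithmetic. The sketch below assumes a context window of about 32,000 tokens for the largest GPT-4 variant and a prompt price of 0.06 USD per 1,000 tokens; both numbers are assumptions for illustration, not figures from this summary, and should be checked against current pricing.

```python
# Assumed values, labeled as such; only PAGES_PER_CONTEXT comes from the text.
CONTEXT_TOKENS = 32_000             # assumed context window of the largest GPT-4 variant
PAGES_PER_CONTEXT = 50              # from the text: ~50 pages of input fit in one query
PRICE_PER_1K_PROMPT_TOKENS = 0.06   # assumed USD price per 1,000 prompt tokens


def estimate_prompt_cost(pages: int) -> float:
    """Estimate the USD cost of sending `pages` pages as prompt tokens."""
    tokens_per_page = CONTEXT_TOKENS / PAGES_PER_CONTEXT
    total_tokens = pages * tokens_per_page
    return total_tokens / 1000 * PRICE_PER_1K_PROMPT_TOKENS


if __name__ == "__main__":
    print(f"Estimated cost for 10,000 pages: ${estimate_prompt_cost(10_000):,.2f}")
```

Under these assumptions, 10,000 pages come to several million tokens and a cost in the hundreds of dollars, which is consistent with the claim above.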

Quotes

"In-context learning solves this problem with a clever trick: instead of sending all the documents with each LLM prompt, it sends only a handful of the most relevant documents."

"Most developers start new LLM apps using the OpenAI API, usually with the gpt-4 or gpt-4-32k model."

"Agents have the potential to become a central piece of the LLM app architecture."

Key insights distilled from

by Matt Bornste... at a16z.com 06-20-2023

https://a16z.com/emerging-architectures-for-llm-applications/

Deeper Inquiries

How might advancements in agent frameworks impact the future development of LLM applications?

Advancements in agent frameworks have the potential to significantly impact the future development of LLM applications by introducing a new set of capabilities that go beyond what traditional language models offer. Agents can provide AI apps with advanced reasoning, planning, tool usage, and memory functionalities. They enable applications to solve complex problems, interact with the external world, and learn from experiences post-deployment. This opens up possibilities for LLM applications to not only generate content but also to act upon it in a more autonomous and intelligent manner.

While the current agent frameworks are still in the proof-of-concept phase and may not be fully reliable or reproducible for task completion yet, their integration into LLM architectures could lead to a paradigm shift in how these applications operate. As agents mature and become more capable, they could potentially take over significant parts of the existing stack or even drive recursive self-improvement within LLM systems. Therefore, as agent frameworks evolve and improve their functionality, we can expect them to play a central role in shaping the future development of LLM applications.

What are some potential drawbacks or limitations of relying heavily on pre-trained AI models like OpenAI's offerings?

Relying heavily on pre-trained AI models like OpenAI's offerings comes with certain drawbacks and limitations that developers need to consider when building LLM applications:

1. Limited Customization: Pre-trained models have fixed architectures and parameters that may not perfectly align with specific use cases or domains. This lack of customization can limit the model's ability to adapt precisely to unique requirements.

2. Data Privacy Concerns: Using pre-trained models often involves sharing sensitive data with third-party providers like OpenAI for inference tasks. This raises concerns about data privacy and security since confidential information might be exposed during processing.

3. Dependency on External APIs: Leveraging pre-trained models typically requires continuous access to external APIs provided by organizations like OpenAI. Any disruptions or changes in API availability could impact application functionality if proper contingency plans are not in place.

4. Scalability Challenges: Large-scale deployment of pre-trained models across multiple users or high-volume scenarios can pose scalability challenges due to resource constraints such as computational power or latency issues during inference.

5. Cost Considerations: Depending solely on proprietary pre-trained models may incur significant costs over time, especially for large-scale deployments where frequent interactions with these models are necessary.

6. Ethical Implications: Since pre-trained AI models inherit biases present in their training data, relying heavily on them without addressing bias mitigation strategies can perpetuate unfair outcomes or discriminatory behavior within applications.
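One possible contingency plan for the external-API dependency described in this list is a simple fallback chain. The sketch below is an assumption-laden illustration: `hosted_model` and `local_model` are hypothetical stand-ins for real client calls, and no actual provider API is used.

```python
from typing import Callable, Sequence


class AllProvidersFailed(Exception):
    """Raised when every provider in the chain has failed."""


def complete_with_fallback(
    prompt: str, providers: Sequence[Callable[[str], str]]
) -> str:
    """Try each provider in order and return the first successful completion."""
    errors: list[Exception] = []
    for call in providers:
        try:
            return call(prompt)
        except Exception as exc:  # a real system would catch narrower error types
            errors.append(exc)
    raise AllProvidersFailed(errors)


# Hypothetical providers for illustration only.
def hosted_model(prompt: str) -> str:
    raise ConnectionError("API unavailable")  # simulate an outage


def local_model(prompt: str) -> str:
    return f"[local] {prompt}"


if __name__ == "__main__":
    print(complete_with_fallback("Summarize this ticket.", [hosted_model, local_model]))
```

The order of the provider list encodes the preference, so swapping the primary model or adding a self-hosted backup changes one line rather than every call site.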

How can traditional machine learning teams adapt to leverage large language models effectively in their projects?

Traditional machine learning teams looking to leverage large language models effectively in their projects can adapt by incorporating several key strategies:

1. Skill Development: Upskill team members through training programs focused on the natural language processing (NLP) concepts relevant to working with large language models.

2. Collaboration: Foster collaboration between domain experts who understand specific project requirements and ML engineers proficient at implementing large language model solutions.

3. Experimentation: Encourage experimentation with fine-tuning open-source base models using platforms like Databricks or Hugging Face, while gradually integrating larger proprietary offerings like GPT-4 where performance needs call for it.

4. Infrastructure Optimization: Size infrastructure resources such as cloud computing instances to workload demands so that projects using large language models scale efficiently.

5. Validation Tools Adoption: Adopt operational tools such as Weights & Biases or PromptLayer to log LLM outputs. These logs help improve prompt construction, which in turn leads to better model performance.

6. Adaptation Planning: Develop contingency plans for a potential shift towards agent-based frameworks, so the team is ready for advancements that affect traditional machine learning practice, particularly NLP tasks involving large text datasets.
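The logging practice mentioned in item 5 can be sketched without any particular vendor. The wrapper below is a hypothetical illustration that writes prompt and output pairs as JSON lines; it does not use the Weights & Biases or PromptLayer APIs, and `fake_model` is a placeholder for a real LLM call.

```python
import io
import json
import time
from typing import Callable, TextIO


def logged(model: Callable[[str], str], sink: TextIO) -> Callable[[str], str]:
    """Wrap a model call so every prompt/output pair is appended to `sink`."""

    def wrapper(prompt: str) -> str:
        start = time.perf_counter()
        output = model(prompt)
        record = {
            "prompt": prompt,
            "output": output,
            "latency_s": round(time.perf_counter() - start, 4),
        }
        sink.write(json.dumps(record) + "\n")  # one JSON object per line
        return output

    return wrapper


# Hypothetical model for illustration only.
def fake_model(prompt: str) -> str:
    return prompt.upper()


if __name__ == "__main__":
    log = io.StringIO()  # a real setup would use a file or a logging service
    model = logged(fake_model, log)
    model("classify this review")
    print(log.getvalue())
```

Keeping the records in a line-oriented format makes it easy to compare outputs across prompt revisions later.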
